Don’t panic! Life imitates art, to be sure, but hopefully the researchers in charge of the Cognitive Architecture for Space Exploration, or CASE, have taken the right lessons from 2001: A Space Odyssey, and their AI won’t kill us all and/or expose us to alien artifacts so we enter a state of cosmic nirvana. (I think that’s what happened.)

CASE is primarily the work of Pete Bonasso, who has been working in AI and robotics for decades, since well before the current vogue of virtual assistants and natural language processing. It’s easy to forget these days that research in this area goes back to the middle of the last century, with a boom in the ’80s and ’90s as computing and robotics began to proliferate.

The question is how to intelligently monitor and administer a complicated environment like that of a space station, a crewed spaceship, or a colony on the surface of the Moon or Mars. It’s a simple question whose answer has been evolving for decades. The International Space Station (which just turned 20) has complex systems governing it and has grown more complex over time, but it’s far from the HAL 9000 that we all think of, and which inspired Bonasso to begin with.

“When people ask me what I am working on, the easiest thing to say is, ‘I am building HAL 9000,’ ” he wrote in a piece published today in the journal Science Robotics. Currently that work is being done under the auspices of TRAC Lab, a research outfit in Houston.

One of the many challenges of this project is marrying the various layers of awareness and activity. For example, a robot arm may need to move something outside the habitat while someone else wants to start a video call with another part of the colony. There’s no reason for a single system to encompass both the command and control methods for robotics and a VoIP stack, yet at some point these responsibilities should be known and understood by some overarching agent.

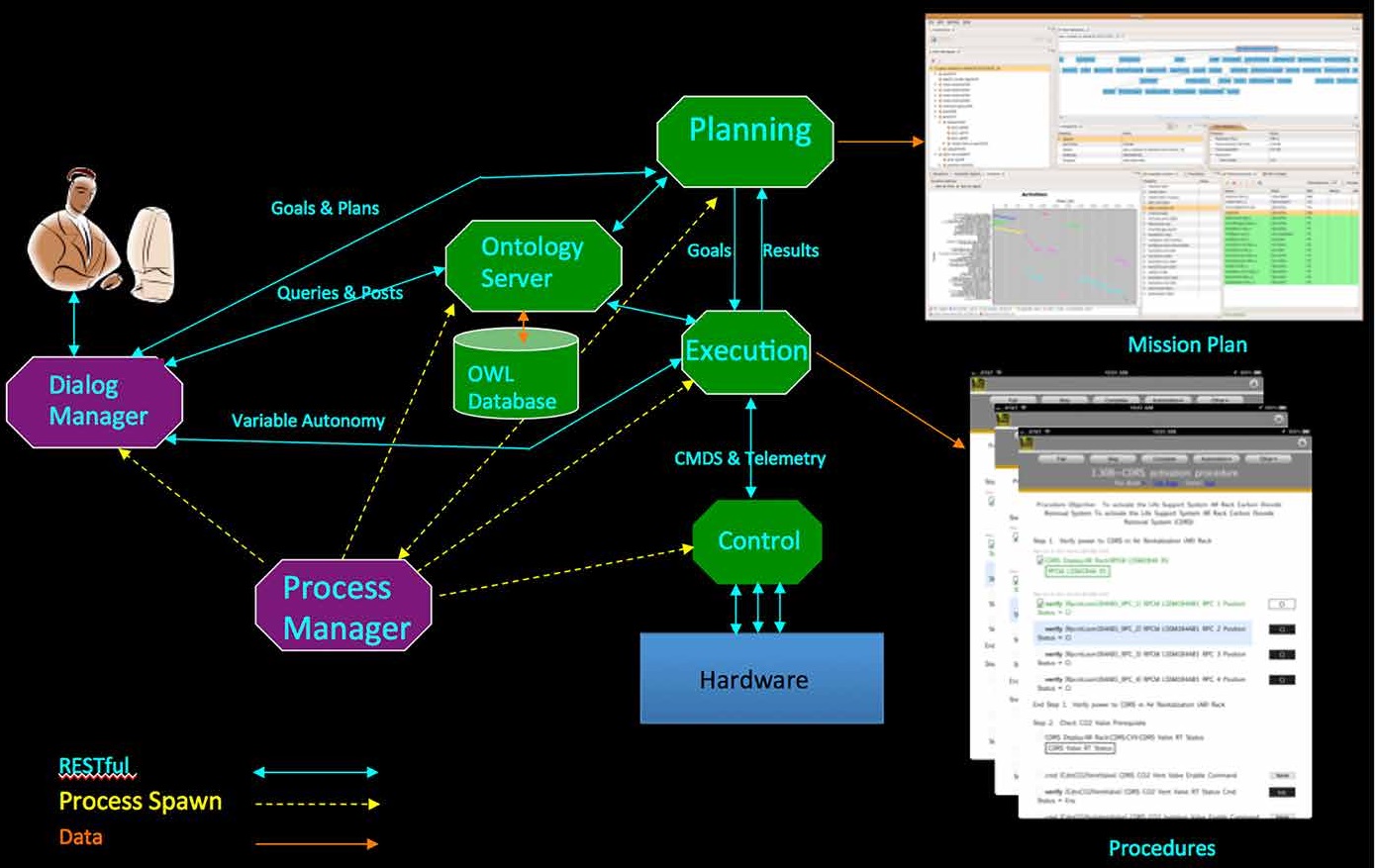

CASE, therefore, isn’t some kind of mega-intelligent know-it-all AI, but an architecture for organizing systems and agents that is itself an intelligent agent. As Bonasso describes in his piece, and as is documented more thoroughly elsewhere, CASE is composed of several “layers” that govern control, routine activities, and planning. A voice interaction system translates human-language queries or commands into tasks those layers can carry out. But it’s the “ontology” system that’s the most important.

Any AI expected to manage a spaceship or colony has to have an intuitive understanding of the people, objects, and processes that make it up. At a basic level, for instance, that might mean knowing that if there’s no one in a room, the lights can be turned off to save power, but the room still can’t be depressurized. Or, if someone moves a rover from its bay to park it by a solar panel, the AI has to understand that it’s gone, how to describe where it is, and how to plan around its absence.
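To make that concrete, here is a toy Python sketch of the kind of facts and rules such an ontology has to encode. It is not CASE’s actual ontology or code: the `Room` and `Rover` classes and the rule functions are hypothetical names invented for illustration, and a real system would use a proper knowledge representation rather than a couple of dataclasses.

```python
from dataclasses import dataclass, field


@dataclass
class Room:
    name: str
    occupants: set[str] = field(default_factory=set)  # who is currently inside


@dataclass
class Rover:
    name: str
    location: str  # the place where the rover is currently parked


def may_turn_off_lights(room: Room) -> bool:
    # An empty room doesn't need lighting, so switching it off saves power.
    return not room.occupants


def may_depressurize(room: Room) -> bool:
    # Emptiness alone is never enough: in this sketch, depressurizing is
    # simply not something the agent may infer on its own.
    return False


def move_rover(rover: Rover, destination: str) -> None:
    # Updating the location is what lets the agent know the rover has left
    # its bay and describe where it went.
    rover.location = destination


def rovers_in(place: str, rovers: list[Rover]) -> list[str]:
    # Names of all rovers currently at the given place.
    return [r.name for r in rovers if r.location == place]


lab = Room("lab")
print(may_turn_off_lights(lab), may_depressurize(lab))  # True False

fleet = [Rover("Rover1", "vehicle bay"), Rover("Rover2", "vehicle bay")]
move_rover(fleet[1], "solar panel array")
print(rovers_in("vehicle bay", fleet))  # ['Rover1']
```

The point of the sketch is that “the room is empty” licenses one action but not the other; that distinction has to be written down somewhere, because the machine won’t work it out on its own.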

This type of common-sense logic is deceptively difficult and is one of the major problems being tackled in AI today. Humans have years to learn cause and effect, to gather and assemble visual clues into a map of the world, and so on; for robots and AI, all of that has to be built from scratch (and they’re not good at improvising). But CASE is working on fitting the pieces together.

“For example,” Bonasso writes, “the user could say, ‘Send the rover to the vehicle bay,’ and CASE would respond, ‘There are two rovers. Rover1 is charging a battery. Shall I send Rover2?’ Alas, if you say, ‘Open the pod bay doors, CASE’ (assuming there are pod bay doors in the habitat), unlike HAL, it will respond, ‘Certainly, Dave,’ because we have no plans to program paranoia into the system.”
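The rover exchange in that quote boils down to a disambiguation step: the request names “the rover,” the agent knows there are several, and it uses what it knows about each one to propose a sensible choice. The Python below is a minimal illustration of that logic only, not CASE’s implementation; `Rover`, `respond_to_send_request`, and the `activity` field are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional

NUMBER_WORDS = {2: "two", 3: "three", 4: "four", 5: "five"}


@dataclass
class Rover:
    name: str
    activity: Optional[str] = None  # e.g. "charging a battery"; None means idle


def respond_to_send_request(rovers: list[Rover], destination: str) -> str:
    """Hypothetical reply to 'Send the rover to <destination>'."""
    if not rovers:
        return "There are no rovers."
    if len(rovers) == 1:
        # Only one candidate, so there is nothing to disambiguate.
        return f"Sending {rovers[0].name} to the {destination}."
    busy = [r for r in rovers if r.activity is not None]
    idle = [r for r in rovers if r.activity is None]
    count = NUMBER_WORDS.get(len(rovers), str(len(rovers)))
    parts = [f"There are {count} rovers."]
    parts += [f"{r.name} is {r.activity}." for r in busy]
    if idle:
        # Suggest the first idle rover instead of interrupting a busy one.
        parts.append(f"Shall I send {idle[0].name}?")
    else:
        parts.append("All of them are busy. Which one should I send?")
    return " ".join(parts)


fleet = [Rover("Rover1", "charging a battery"), Rover("Rover2")]
print(respond_to_send_request(fleet, "vehicle bay"))
# There are two rovers. Rover1 is charging a battery. Shall I send Rover2?
```

The hard part in a real system is not this branching but keeping facts like “Rover1 is charging” accurate, which is exactly the ontology’s job.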

I’m not sure why he had to write “alas” — our love of cinema is exceeded by our will to live, surely.

That won’t be a problem for some time to come, of course — CASE is still very much a work in progress.

“We have demonstrated it to manage a simulated base for about 4 hours, but much needs to be done for it to run an actual base,” Bonasso writes. “We are working with what NASA calls analogs, places where humans get together and pretend they are living on a distant planet or the moon. We hope to slowly, piece by piece, work CASE into one or more analogs to determine its value for future space expeditions.”

I’ve asked Bonasso for some more details and will update this post if I hear back.

Whether a CASE- or HAL-like AI will ever be in charge of a base is almost not a question anymore; in a way, it’s the only reasonable way to manage what will certainly be an immensely complex system of systems. But for obvious reasons it needs to be developed from scratch with an emphasis on safety, reliability… and sanity.
